scikit-learn models

import sklearn

print(f"scikit-learn version: {sklearn.__version__}")

assert tuple(int(part) for part in sklearn.__version__.split(".")[:2]) >= (0, 20)  # compare version numbers numerically, not as strings

from scipy.special import softmax

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression, LogisticRegression, SGDRegressor, Ridge, SGDClassifier

from sklearn.model_selection import GridSearchCV

from sklearn.neural_network import MLPClassifier

from sklearn.preprocessing import PolynomialFeatures, StandardScaler

from sklearn.pipeline import Pipeline

from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor, plot_tree, export_graphviz

from sklearn.metrics import classification_report, mean_squared_error, RocCurveDisplay  # RocCurveDisplay replaces plot_roc_curve, which was removed in scikit-learn 1.2

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score

Create a model

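# Linear regression (ordinary least squares, closed-form solution)

model = LinearRegression()

# Linear regression (stochastic gradient descent)

model = SGDRegressor()

# Logistic regression (stochastic gradient descent)

model = SGDClassifier(loss="log_loss")  # log loss = binary cross-entropy; spelled loss="log" before scikit-learn 1.1

# Softmax regression

model = LogisticRegression()  # multinomial (softmax) by default for multiclass targets with the lbfgs solver; the explicit multi_class="multinomial" argument is deprecated since scikit-learn 1.5

# --> s = softmax(scores)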

# Decision tree classifier

model_tree = DecisionTreeClassifier(max_depth=2, random_state=42)

# Decision tree regression

model = DecisionTreeRegressor(max_depth=2)

# Random forest

model = RandomForestClassifier(n_estimators=200)

# Multilayer perceptron

model = MLPClassifier()
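The comment `s = softmax(scores)` above can be checked numerically. The following is a minimal sketch, assuming the Iris dataset as a stand-in for real data (the notebook does not show which dataset it uses) and a scikit-learn version where the default solver fits a multinomial model for multiclass targets. The names `softmax_model`, `scores`, and `probabilities` are illustrative.

```python
import numpy as np
from scipy.special import softmax
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Iris is only a small 3-class example dataset (an assumption, not from the notebook).
X, y = load_iris(return_X_y=True)

# With the default lbfgs solver and more than two classes,
# the model is fitted as a multinomial (softmax) regression.
softmax_model = LogisticRegression(max_iter=1000)
softmax_model.fit(X, y)

# Raw class scores: one row per sample, one column per class.
scores = softmax_model.decision_function(X)

# Softmax over the class axis turns the scores into probabilities.
probabilities = softmax(scores, axis=1)

# The result should agree with what predict_proba returns.
matches = np.allclose(probabilities, softmax_model.predict_proba(X))
print(matches)
```

Each row of `probabilities` sums to 1, and the predicted class is the column with the largest value.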

Train the model

Calling export_graphviz on a DecisionTreeClassifier that has not been fitted yet raises a NotFittedError:

---------------------------------------------------------------------------
NotFittedError                            Traceback (most recent call last)
<ipython-input-7-5e1517ccce5d> in <module>
      7     filled=True,
      8     rounded=True,
----> 9     special_characters=True,
     10 )
     11 graphviz.Source(dot_data)

~/python3.7/lib/python3.7/site-packages/sklearn/utils/validation.py in inner_f(*args, **kwargs)
     70                           FutureWarning)
     71         kwargs.update({k: arg for k, arg in zip(sig.parameters, args)})
---> 72         return f(**kwargs)
     73     return inner_f
     74

~/python3.7/lib/python3.7/site-packages/sklearn/tree/_export.py in export_graphviz(decision_tree, out_file, max_depth, feature_names, class_names, label, filled, leaves_parallel, impurity, node_ids, proportion, rotate, rounded, special_characters, precision)
    762     """
    763
--> 764     check_is_fitted(decision_tree)
    765     own_file = False
    766     return_string = False

~/python3.7/lib/python3.7/site-packages/sklearn/utils/validation.py in inner_f(*args, **kwargs)
     70                           FutureWarning)
     71         kwargs.update({k: arg for k, arg in zip(sig.parameters, args)})
---> 72         return f(**kwargs)
     73     return inner_f
     74

~/python3.7/lib/python3.7/site-packages/sklearn/utils/validation.py in check_is_fitted(estimator, attributes, msg, all_or_any)
   1017
   1018     if not attrs:
-> 1019         raise NotFittedError(msg % {'name': type(estimator).__name__})
   1020
   1021

NotFittedError: This DecisionTreeClassifier instance is not fitted yet. Call 'fit' with appropriate arguments before using this estimator.
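The error goes away once `fit` is called before the export. The sketch below first triggers the same exception on purpose and then fits the tree. The Iris dataset and the variable names `X`, `y`, `raised`, and `dot_data` are assumptions for illustration, since the failing cell's data is not shown in the notebook.

```python
from sklearn.datasets import load_iris
from sklearn.exceptions import NotFittedError
from sklearn.tree import DecisionTreeClassifier, export_graphviz

# Example data (an assumption; the notebook's own dataset is not shown).
iris = load_iris()
X, y = iris.data, iris.target

model_tree = DecisionTreeClassifier(max_depth=2, random_state=42)

# Exporting before fit() raises NotFittedError.
try:
    export_graphviz(model_tree, out_file=None)
    raised = False
except NotFittedError:
    raised = True

# Fitting first makes the export succeed.
model_tree.fit(X, y)

# With out_file=None the DOT source is returned as a string.
dot_data = export_graphviz(
    model_tree,
    out_file=None,
    feature_names=iris.feature_names,
    class_names=list(iris.target_names),
    filled=True,
    rounded=True,
    special_characters=True,
)
print(dot_data[:40])
```

The returned string can then be passed to `graphviz.Source(dot_data)`, as in the failing cell, provided the optional `graphviz` package is installed.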

Is it any good?

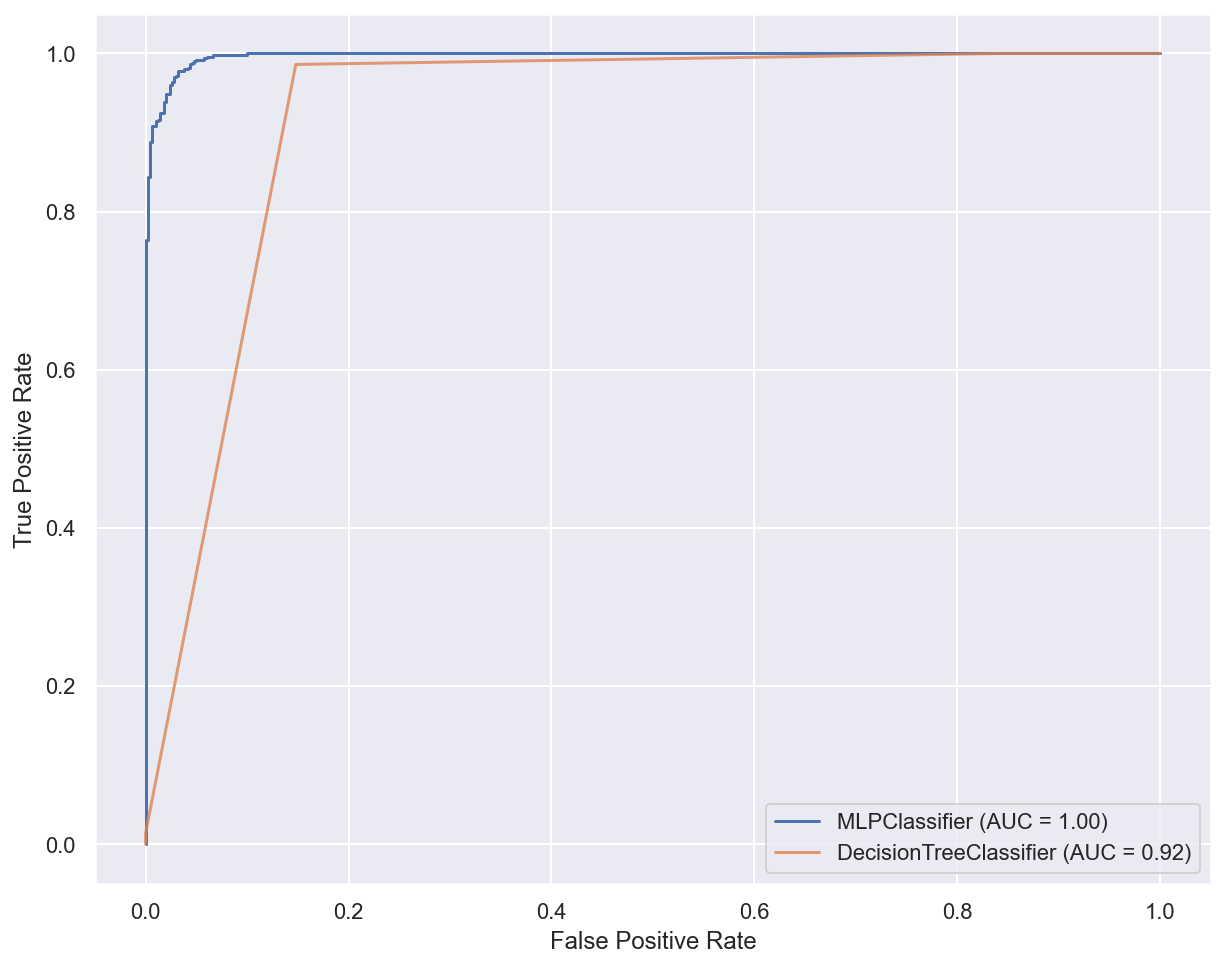
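One common way to answer this question for a classifier is to score it on data it did not see during training. This is a minimal sketch rather than the notebook's own evaluation: the Iris dataset, the 25% test split, and the names `x_test`, `y_pred`, `test_accuracy`, and `report` are assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, classification_report
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Example data (an assumption; any labelled dataset works the same way).
X, y = load_iris(return_X_y=True)

# Hold out a quarter of the samples for evaluation, keeping class balance.
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

model_tree = DecisionTreeClassifier(max_depth=2, random_state=42)
model_tree.fit(x_train, y_train)

# Predict on the held-out samples only.
y_pred = model_tree.predict(x_test)

# Overall accuracy plus per-class precision, recall and F1.
test_accuracy = accuracy_score(y_test, y_pred)
report = classification_report(y_test, y_pred)
print(f"test accuracy: {test_accuracy:.3f}")
print(report)
```

For a regressor, `mean_squared_error(y_test, y_pred)` plays the role that `accuracy_score` plays here.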

Compare
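Several candidate models can be compared on equal footing with `cross_val_score`, which is imported at the top of the notebook. The sketch below assumes the Iris dataset and 5-fold cross-validation. The `candidates` dictionary and the `mean_scores` name are illustrative choices, not part of the original.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Example data (an assumption).
X, y = load_iris(return_X_y=True)

# Candidate models, reusing settings from the "create a model" cell.
candidates = {
    "decision tree": DecisionTreeClassifier(max_depth=2, random_state=42),
    "random forest": RandomForestClassifier(n_estimators=200, random_state=42),
    "logistic regression": LogisticRegression(max_iter=1000),
}

# Mean accuracy over 5 folds for each candidate.
mean_scores = {}
for name, estimator in candidates.items():
    scores = cross_val_score(estimator, X, y, cv=5)
    mean_scores[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} +/- {scores.std():.3f}")
```

Reporting the standard deviation alongside the mean helps judge whether a difference between two models is larger than the fold-to-fold noise.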

Tune a model (grid search)

# List the tunable hyperparameters

estimator = DecisionTreeClassifier()

estimator.get_params().keys()

parameters = [{'max_depth': list(range(1, 21)), 'min_samples_leaf': [1, 5, 10, 20, 50, 100]}]

grid_search_cv = GridSearchCV(estimator, parameters)

# Search for the best parameters with the specified classifier on the training data
# (x_train and y_train are assumed to come from an earlier train_test_split)

grid_search_cv.fit(x_train, y_train)

# Print the best combination of hyperparameters found

print(grid_search_cv.best_params_)
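The cell above relies on `x_train` and `y_train`, which the notebook never defines. A self-contained version might look like the sketch below. It assumes the Iris dataset and uses a smaller grid to keep the run short. The names `x_test`, `best_model`, and `test_score` are illustrative additions.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Example data and split (assumptions; the notebook does not show its own).
X, y = load_iris(return_X_y=True)
x_train, x_test, y_train, y_test = train_test_split(
    X, y, random_state=42, stratify=y
)

# A reduced grid: every combination is evaluated with 5-fold cross-validation.
parameters = {
    "max_depth": list(range(1, 6)),
    "min_samples_leaf": [1, 5, 10],
}
grid_search_cv = GridSearchCV(
    DecisionTreeClassifier(random_state=42), parameters, cv=5
)
grid_search_cv.fit(x_train, y_train)

# best_estimator_ is refitted on the whole training set by default.
best_model = grid_search_cv.best_estimator_
test_score = best_model.score(x_test, y_test)

print(grid_search_cv.best_params_)
print(f"test accuracy of the best model: {test_score:.3f}")
```

Scoring the refitted `best_estimator_` on the held-out test set gives an estimate that was not used to pick the hyperparameters.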